
Bei Xiao Receives $420,000 Research Grant from National Institutes of Health

Learning diagnostic latent representations for human material perception

Associate Director Bei Xiao received a $420,000 research grant from the National Institutes of Health (NIH) for the project "Learning diagnostic latent representations for human material perception: common mechanisms and individual variability". The project start date is April 1, 2023. Visit Funding & Partnerships to view other grants and awards given to our faculty and staff.

Abstract: Visually discriminating and identifying materials (such as deciding whether a cup is made of plastic or glass) is crucial for many everyday tasks such as handling objects, walking, driving, using tools effectively, and selecting food, yet material perception remains poorly understood. The main challenge is that a given material can take on an enormous variety of appearances depending on 3D shape, lighting, object class, and viewpoint, and humans have to untangle these factors to achieve perceptual constancy. This is a basic unsolved aspect of biological visual processing. We use translucent materials such as soap, wax, skin, fruit, and meat as a model system. Previous research has revealed a host of useful image cues and found that 3D geometry interacts with the perception of translucent materials in intricate ways. However, the discovered image cues often do not generalize across geometries, scenes, and tasks, and are not directly applicable to images of real-world objects. In addition, the stimuli used have been small in scale and limited in diversity (e.g., most look like frosted glass, jade, or marble). Our long-term objectives are to measure the richness of human material perception across diverse appearances and 3D geometries, and to solve the puzzle of how humans identify the distal physical causes of image features. To achieve this goal, we will take advantage of recent progress in unsupervised deep neural networks, combined with human psychophysics, to investigate material perception with a large-scale image data set of real-world materials. Our first specific aim is to identify a latent space that predicts the joint effects of 3D shape and physical material properties on human material discrimination, using unsupervised learning on rendered images. We will then investigate how the latent representations in neural networks relate to human perception, for example by comparing the models' predictions of how 3D geometry affects material appearance with human judgments.
Further, we aim to identify image features that are diagnostic of material appearance with respect to fine 3D geometry. Our second specific aim is to investigate high-level semantic material perception from photographs of real-world objects and to characterize the effects of high-level recognition on material rating tasks. To discover a compact representation of large-scale real-world images, we train a style-based Generative Adversarial Network (StyleGAN) on a large number of photographs. Our pilot data suggest that StyleGAN can synthesize highly realistic images and that its latent space can disentangle mid-level semantic material attributes (e.g., "see-throughness"). Therefore, the latent space can help us discover diagnostic image features related to high-level material attributes and individual differences. By manipulating the stimuli along the disentangled dimensions of the latent space, we will measure the effects of global properties (e.g., object identity) and local features (e.g., texture) on material perception, and test the hypothesis that the individual differences we found in a preliminary study arise from individual variability in high-level scene interpretation. Collectively, these findings will allow us to examine the basic assumptions of mid-level vision by uncovering the task-dependent interplay between high-level vision and mid-level representations, and will provide further guidance in the search for neural correlates of material perception. The methods developed in this proposal, such as comparing humans with machine learning models and characterizing individual variability, will have an impact on other research questions in perception and cognition. The AREA proposal provides a unique multidisciplinary training opportunity for undergraduate students in human psychophysics, machine learning, and image processing. The PI and the students will also investigate novel methods of recruiting under-represented human subjects, such as "peer-recruiting".
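Manipulating stimuli "along the disentangled dimensions of the latent space" is commonly done by moving a latent code along a direction vector and rendering each step. The sketch below shows one simple version of that idea, under stated assumptions: the direction is estimated as the difference between the mean latent codes of images labeled high versus low on an attribute (the hypothetical helper `direction_from_labels`), and a graded stimulus series is built with the hypothetical `latent_continuum`. The 512-dimensional codes and random labels are stand-ins; this is not the project's actual pipeline, and rendering each row would require a trained generator that is not shown here.

```python
import numpy as np


def direction_from_labels(latents: np.ndarray, labels) -> np.ndarray:
    """Hypothetical attribute direction: mean latent of images labeled high on an
    attribute (e.g. "see-throughness") minus mean latent of images labeled low."""
    labels = np.asarray(labels, dtype=bool)
    return latents[labels].mean(axis=0) - latents[~labels].mean(axis=0)


def latent_continuum(w: np.ndarray, direction: np.ndarray, strengths) -> np.ndarray:
    """Return one latent code per strength alpha: w + alpha * direction / ||direction||.

    Each row would be passed to a trained generator (not shown) to render one
    stimulus in a graded series along the attribute.
    """
    unit = direction / np.linalg.norm(direction)
    alphas = np.asarray(strengths, dtype=float)
    return w[None, :] + alphas[:, None] * unit[None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latents = rng.normal(size=(100, 512))   # stand-in for encoded photographs
    ratings = rng.random(100) > 0.5         # stand-in for high/low human labels
    d = direction_from_labels(latents, ratings)
    series = latent_continuum(latents[0], d, np.linspace(-3, 3, 7))
    print(series.shape)  # (7, 512)
```

Normalizing the direction makes the strength values comparable across attributes. Mean-difference directions are only one option; a linear classifier's normal vector is another common choice.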

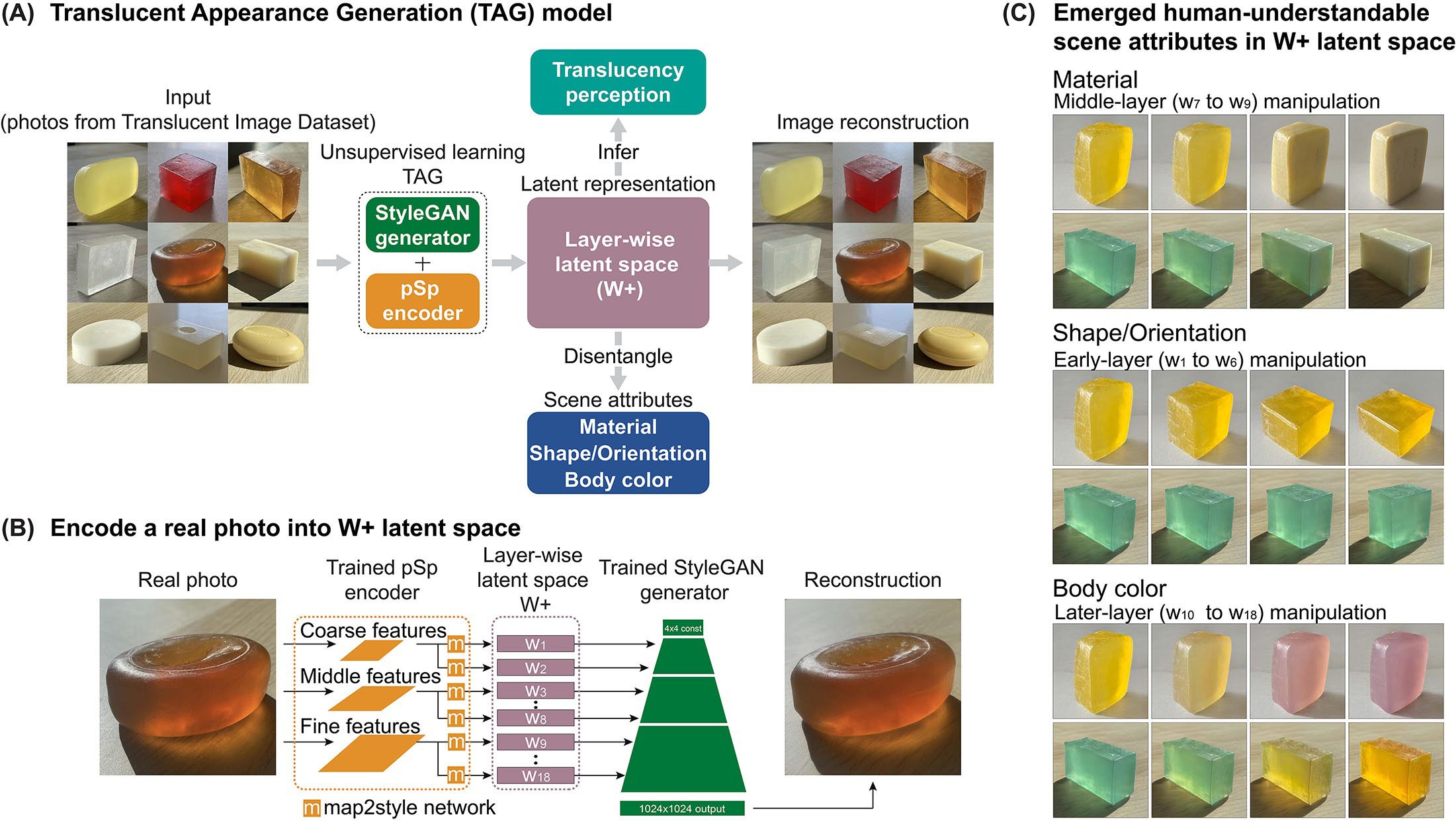

The Translucent Appearance Generation (TAG) model using StyleGAN. Given natural images as input, the TAG framework, which is built on the StyleGAN2-ADA generator and the pSp encoder architecture, learns to synthesize perceptually convincing images of translucent objects.


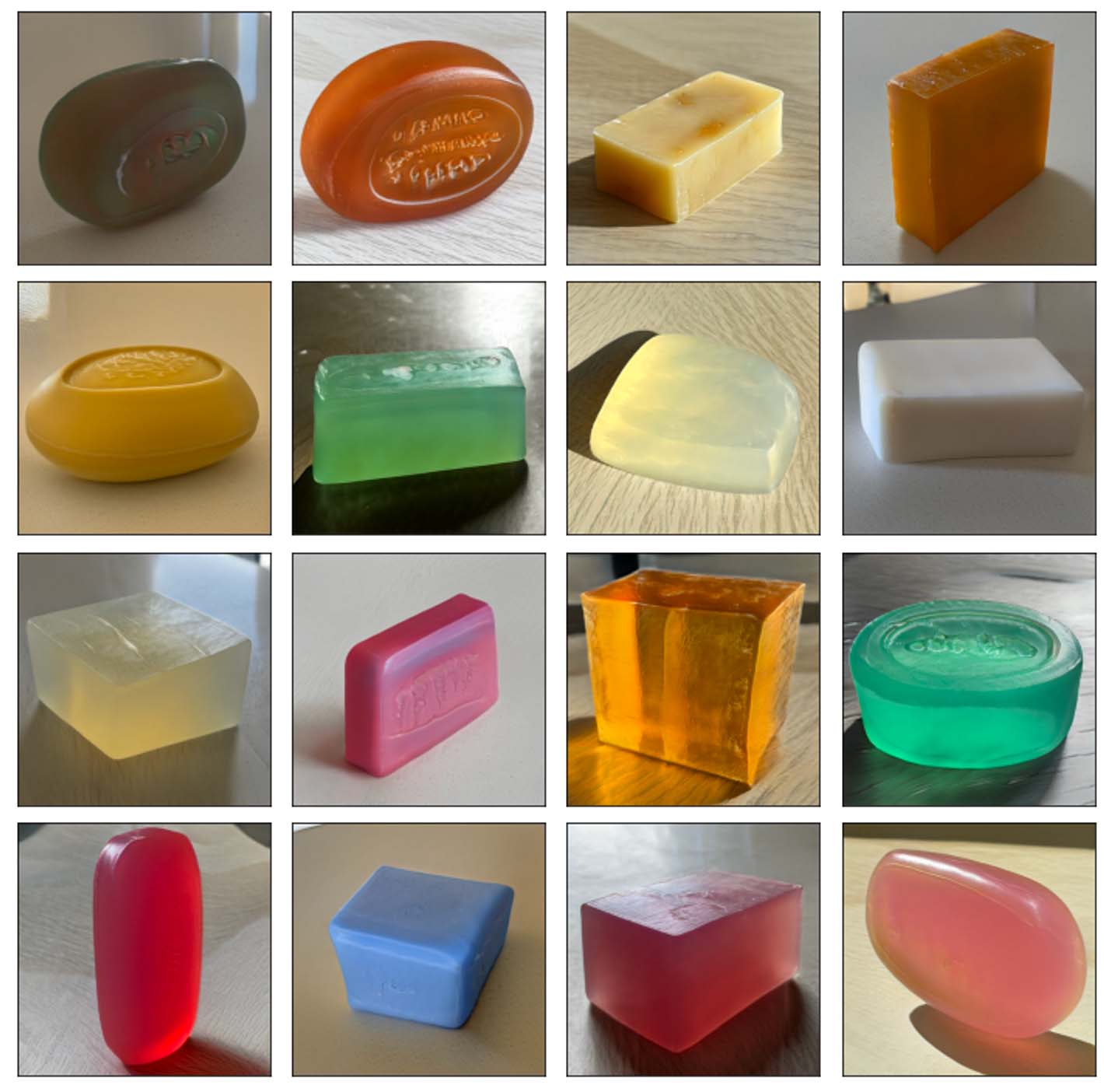

Images of soaps generated by our TAG model.


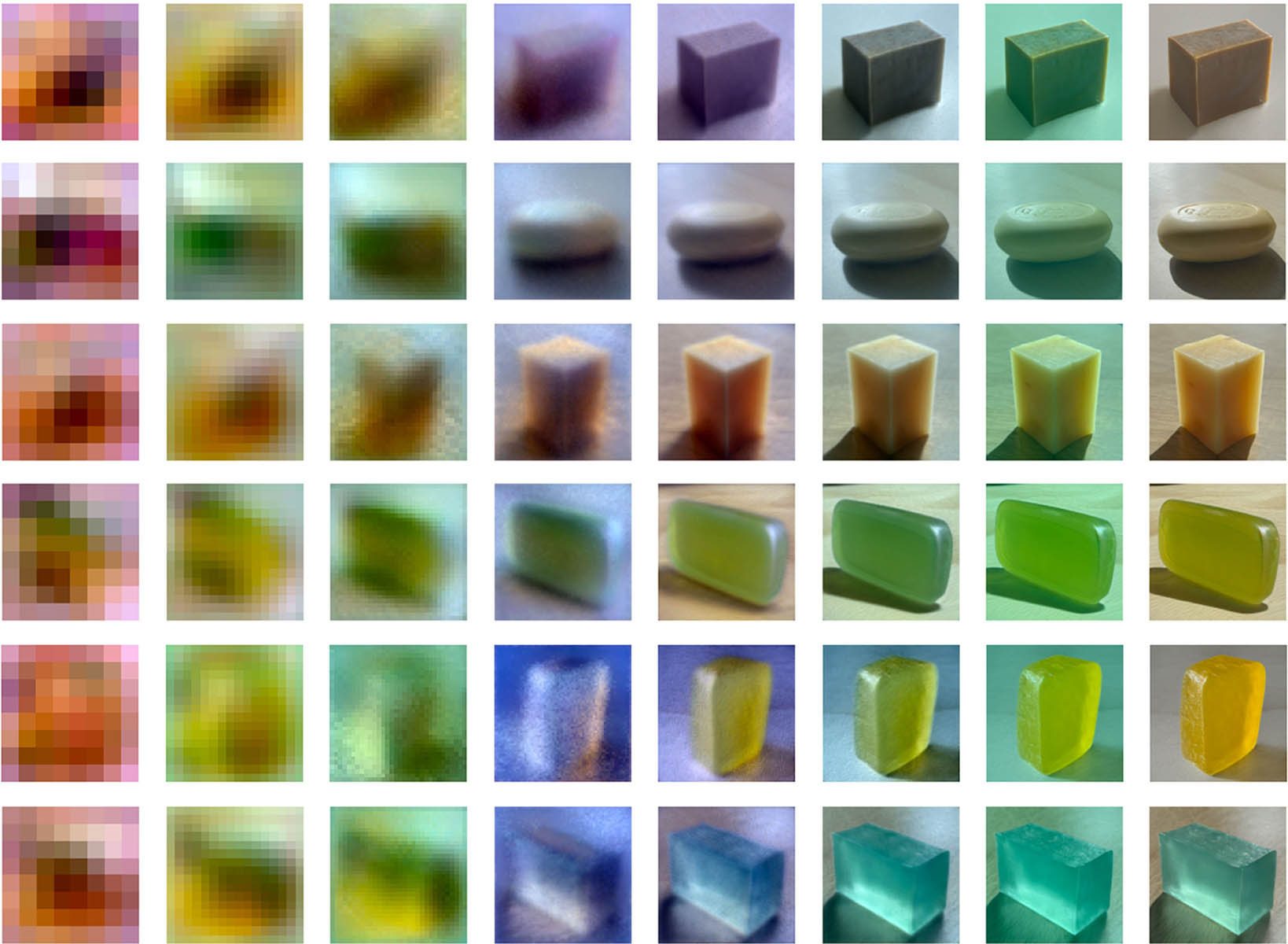

Translucency is an essential visual phenomenon, and the high variability of translucent appearances makes the underlying perceptual mechanism difficult to understand. Using unsupervised learning, this work proposes an image-computable framework that discovers a multiscale latent representation capable of disentangling scene attributes related to human translucency perception. Navigating the learned latent space enables coherent editing of translucent appearances. Liao et al. found that translucent impressions emerge in the images' relatively coarse spatial-scale features (columns 3 and 4), suggesting that learning the scale-specific statistical structure of natural images may facilitate the representation of material properties across contexts (Liao et al., 2023).
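In StyleGAN-family generators, the "multiscale" structure of the latent space is usually exposed through W+, which holds one 512-dimensional style vector per generator layer. Layers that act on low-resolution feature maps control coarse structure, and layers at higher resolutions control fine detail. The sketch below illustrates how scale-specific edits can be made by copying only the coarse layers from one code into another. It rests on several assumptions: the 256x256 resolution and the random codes are stand-ins (the TAG model's actual resolution and the layers behind columns 3 and 4 are not stated here); "coarse" follows the 4x4 to 8x8 grouping from the original StyleGAN paper; and the two-layers-per-resolution mapping in the hypothetical `layer_resolution` is an approximation, because StyleGAN2 shares one style vector between a block's toRGB layer and the next block's first convolution.

```python
import numpy as np


def num_wplus_layers(resolution: int) -> int:
    """Style vectors a StyleGAN2 generator uses at a square power-of-two
    resolution 2**k: 2*k - 2 (e.g. 18 at 1024x1024, 14 at 256x256)."""
    if resolution < 4 or resolution & (resolution - 1):
        raise ValueError("resolution must be a power of two >= 4")
    k = resolution.bit_length() - 1
    return 2 * k - 2


def layer_resolution(layer: int) -> int:
    """Approximate feature-map resolution a W+ layer modulates, assuming two
    style inputs per resolution starting at 4x4."""
    return 4 * 2 ** (layer // 2)


def mix_layers(w_base: np.ndarray, w_donor: np.ndarray, layers) -> np.ndarray:
    """Copy w_base and overwrite the selected layers with w_donor's style vectors."""
    mixed = w_base.copy()
    idx = list(layers)
    mixed[idx] = w_donor[idx]
    return mixed


if __name__ == "__main__":
    n = num_wplus_layers(256)                      # 14 layers, 4x4 up to 256x256
    coarse = [i for i in range(n) if layer_resolution(i) <= 8]
    rng = np.random.default_rng(0)
    w_soap, w_wax = rng.normal(size=(2, n, 512))   # stand-ins for encoded images
    edited = mix_layers(w_soap, w_wax, coarse)     # coarse styles from "wax"
    print(coarse)  # [0, 1, 2, 3]
```

Swapping a different layer range (for example, only the fine layers) and rendering the results side by side is one way to probe which spatial scales carry a translucent impression.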
