Will Human Writing Survive in an Era of Generative AI?

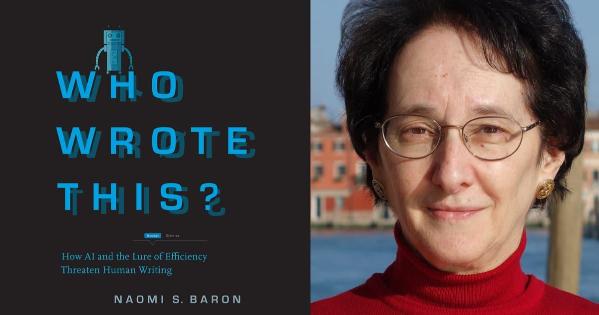

For more than 30 years, College of Arts and Sciences Professor Emerita and linguist Naomi S. Baron has explored how technology shapes the way we speak, read, write and think. As society moved from reading in print to reading online, Baron surveyed university and secondary school students on their perceptions and practices reading in print versus digitally. Her results ran counter to many assumptions about young “digital natives”: in many instances, they preferred reading physical books to reading words onscreen. Her new book, Who Wrote This?, argues for the importance of human writing and warns against ceding more of our writing to generative AI. “We risk losing out on the power of writing to clarify our thinking and to express ourselves in our own voice,” Baron said.

We will soon reach the one-year anniversary of ChatGPT’s launch into the world. Many educators who were against the platform have changed their thinking; some are guided by explicit policies, and others are not. Do you have recommendations going forward?

At least for the next few years, we’ll continue seeing wide variance in educational approaches to generative AI. The reason has less to do with the technology than the fact that education in the United States is decentralized. States – and sometimes cities or school districts – set the rules for much of their curricula. In colleges and universities, especially private ones, pedagogical decision-making often rests at the level of individual instructors. From everything I hear, most faculty are still struggling to make sense of the power and perils of tools like ChatGPT. Understanding best precedes policymaking.

You have conducted studies of university students (one before and another after the release of ChatGPT), assessing their attitudes towards use of AI as an editing or writing aid. A number of respondents mentioned that tools like predictive texting stifled their personal writing voice. Regarding ChatGPT, some were adamant they wouldn’t use the program for writing that was personally important to them, including poetry and love letters. Do you think younger users set similar limits?

In research my colleagues and I have done on reading in print versus digitally, we found highly similar attitudes towards the two media among secondary school and university students. Does the same congruency hold for students’ concerns about shielding their personal writing voice from AI encroachment?

To find out, you might start by thinking about the kinds of writing skills that, say, seventh graders, 10th graders and college seniors are likely to have developed. Students in all those cohorts are potentially absorbed readers, which is partly why my reading research found strong similarities across ages. But seventh graders likely have far less proficiency than college seniors in distinguishing their own writing voice from written work they encounter. Discerning different writing styles is generally a taught skill. So is developing your own writing voice, including discovering ways of creatively stretching the boundaries of more workaday prose.

When it comes to ChatGPT, the description most commonly given for text it produces is “beige,” meaning bland. Large language models work by predicting the next word in a sentence, and the items bubbling to the top in their data sets are the most frequent ones in the language. I suspect younger users will be less likely than more advanced students to identify differences between straightforward language and writing that is more stylistically interesting and personal.

You have great advice for how everyday writers can use GPT as a co-pilot. When should GPT be turned off?

Ultimately it’s up to individual users when to use AI tools in their writing. The exception, of course, is when policies explicitly bar GPT-generated text. My biggest concern is that using AI for text editing and generation can lead to deskilling. The more we rely on AI tools, the less motivation we retain to write and edit on our own. By analogy, think of foreign language skills. Many of us invested years memorizing vocabulary and grammar rules of another language. But if we don’t keep using our Italian or Japanese or whatever, our abilities tend to fade.

Integration of OpenAI’s powerful large language model GPT-4 into Microsoft products will surely mean that business writing tasks will increasingly be done by bots. Yet everyday Microsoft users already see text being predicted for them, along with persistent recommendations to change their grammar or word choice. Some writers are sufficiently confident to ignore suggestions they don’t wish to accept. However, the temptation to acquiesce can be strong, especially for less confident writers. Another problem: At least as of now, Microsoft’s suggestions are sometimes flat-out wrong.

Should there be specific types of writing for which an AI co-pilot is particularly inappropriate? One recent trend that sticks in my craw is having AI write your personal wedding vows. But each to his or her own taste!